How far does technology date back?

February 22, 2023

Modern technology is part of day-to-day life. People use phones to make calls, computers to send emails, and headphones to listen to music, but what was the original purpose of technology?

The abacus dates back to at least 1100 BCE. It’s believed that the abacus was invented in Asia. It was designed to help people do arithmetic, mainly addition, subtraction, multiplication and division. According to www.britannica.com, the tool was commonly used for tasks such as bookkeeping and commercial transactions.

One of the first adding machines was invented by the French mathematician and philosopher Blaise Pascal sometime between 1642 and 1644. Called the Pascaline, it could only add and subtract. Pascal built about 50 of these machines over the next 10 years.

In 1671, the German mathematician and philosopher Gottfried Wilhelm von Leibniz expanded on Pascal’s adding machine so that it could also multiply. It did this through repeated addition: the user had to add the same number over and over while moving parts of the machine into position.

While calculators are great at doing math, the question remains: when was the first computer invented?

Talk of the first computer did not begin until around the 1920s, but it is important to note that all of these “calculator-like inventions” were needed to get mathematicians that far and to show them what technology could actually do.

Around 1820, English mathematician and inventor Charles Babbage saw the need for a device that could carry out astronomical calculations, so he developed a type of calculator called the Difference Engine.

The Difference Engine was designed to do much more than the calculators of its day. It could print the results it produced and work through long, extensive calculations.

The machine was meant to fill a whole room, but because of financial struggles, Babbage was never able to complete it.

In the 1930s, there were two main routes for computer development. One was the differential analyzer, an analog machine, and the other was the series of Harvard Mark machines.

Vannevar Bush, an engineer at the Massachusetts Institute of Technology, created the first modern analog computer. Called the Differential Analyzer, this “calculator” solved differential equations mechanically, taking on problems that were too difficult or too time-consuming to work out by hand.

Meanwhile, Howard Aiken, a professor at Harvard University, learned of Babbage’s work and became interested in it. He took a different approach from Bush.

His machine filled a large room with electromechanical equipment. Although parts of it had to be set up by hand, it was a very effective machine. He called it the Mark I. Machines like it helped pave the way for the ENIAC, which was begun in 1943.

The ENIAC was huge and took up an entire room on its own. Measuring 50 feet by 30 feet and costing around $400,000, it came to be known as a first-generation computer.

It was completed in February 1946, and its first task was performing calculations for the hydrogen bomb.

The ENIAC is often called the first general-purpose electronic computer, but it’s critical to realize that computers were originally meant only for math calculations and nothing more. Now there are all types of computers and many different ways to use them.

These facts and more history about computers can be found in the following Britannica articles.

Pascaline | Britannica

ENIAC | Britannica

Difference Engine | Britannica

Computer: Early business machines | Britannica

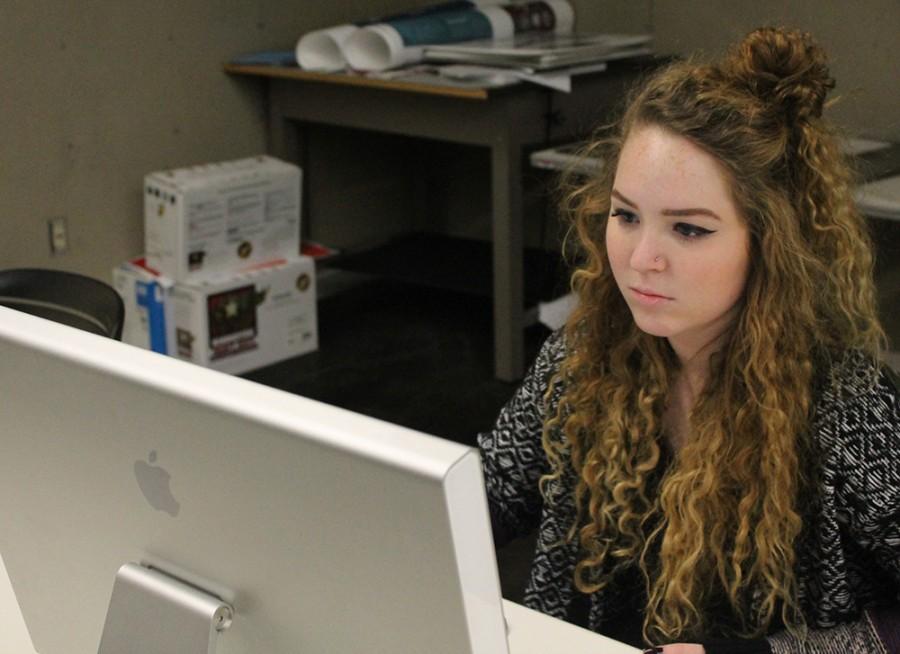

Adriana Hernandez-Santana can be reached at 581-2812 or at [email protected].