Column: Trolley Problem becomes possible reality with driverless cars

January 26, 2017

Last week, I saw a video while surfing through my newsfeed that connected self-driving cars to the ethical dilemma called the Trolley Problem.

The Trolley Problem, first developed by philosopher Philippa Foot, has been adapted over time. However, the essence of the problem remains the same:

Imagine you are near a railroad with a train approaching two sets of tracks. On its current track, Track A, there are five workers unaware of the train. On the other track, Track B, there is one worker. You are standing near a lever which could change the train’s direction from Track A to Track B. Do you pull the lever, saving five lives while sacrificing one?

The situation touches on utilitarianism. Although the theory can be expanded, in this example it means saving more lives, no matter the cost, is the better option.

While I am no expert in ethical dilemmas or philosophy, this question and others similar to it have been discussed in a couple of my courses. I thought of it as an intriguing question, but not one I would have to definitively answer. It is almost comical to think of myself randomly approaching a train track and walking into this scenario.

The video, which described the scenario I was already familiar with, ended with a surprising notion. It said this idea of utilitarianism could be applied to self-driving cars. Until viewing the video, I thought this problem was meant to help individuals develop their own morals, not something to be decided by an entire society.

I can see the revised question now: You are riding in an autonomous car and see five people walking across an intersection, leaving you without enough time to brake. Is the car programmed to sacrifice the vehicle and your own safety by crashing into a nearby obstacle, or does it continue, injuring five pedestrians in the process?

The unlikely train situation could become an everyday reality with the new invention.

Self-driving cars are made partly to create safer driving conditions. The dilemma is determining what is safe. It is easy to see why some believe sacrificing a few for the sake of many is the right approach. At the same time, how much comfort would a person feel knowing they could be collateral damage for something out of their control?

I wholeheartedly supported the invention before the video made this comparison. My best friend died in a car accident. There was no drunk driving and no distractions. Cars are dangerous, but unless something terrible happens to us or someone we know, we view them as a normal and vital part of transportation. I thought self-driving cars were the solution to this problem. I wanted to put my faith in a program to make driving safer.

I never realized it could be at the cost of some humanity. Human error is inevitable, but at least each driver has control of his/her own actions. Utilitarianism is not a wrong way of thinking, but it doesn’t align with my current ideas. I know this now after thinking about it in a practical application.

Self-driving cars are not imaginary. Just as my grandparents recall a time when they did not have a mobile phone, I believe I will tell a future generation about the days when I drove my own vehicle.

Whether self-driving cars are in the near future or not, I think it is important to question these ethical dilemmas and determine at what cost technological advancements test our humanity.

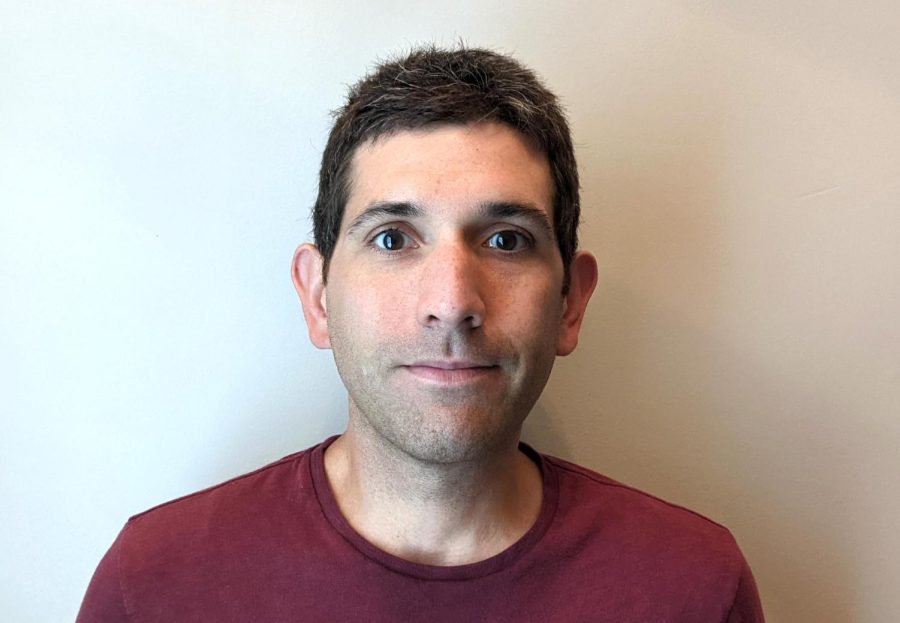

Megan Ivey is a senior journalism major. She can be reached at 581-2812 or [email protected].